LightCode

LightCode: Compiling LLM Inference for Photonic-Electronic Systems

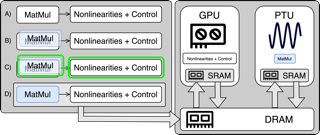

across the GPU and Photonic Tensor Unit (PTU). A) Graph partitioning, B) Stack construction, C) Candidate selection, D) Scheduling.

The growing demand for low-latency, energy-efficient inference in large language models (LLMs) has catalyzed interest in heterogeneous architectures. While GPUs remain dominant, they are poorly suited for integration with emerging domain-specific accelerators such as Photonic Tensor Units (PTUs), which offer low-power, high-throughput linear computation. This motivates hybrid compilation strategies that combine photonic and electronic resources. We present LightCode, a compiler framework and simulator for mapping LLM inference workloads across hybrid photonic-electronic systems. LightCode introduces the Stacked Graph, an intermediate representation that encodes multiple hardware-specific realizations of each tensor operation. Hardware assignment is formulated as a constrained subgraph selection problem optimized for latency or energy under parametric cost models. We evaluate LightCode on the prefill stage of GPT-2 and Llama-7B and show that, under our workload and hardware assumptions, (i) photonic hardware reduced energy by up to 50% in our simulated workloads at maximum sequence length; (ii) multiplexing and assignment strategy yielded latency improvements exceeding 10×; and (iii) optimizing for latency or energy resulted in distinct hardware mappings in our simulations. LightCode offers a modular, foundational framework and simulator for compiling LLMs to emerging photonic accelerators.

https://arxiv.org/pdf/2509.16443
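
To make the Stacked Graph idea concrete, the following is a minimal sketch, not LightCode's actual implementation. It assumes a linear chain of operations rather than a general graph, and it swaps in a simple dynamic program for the constrained subgraph selection described above. The names `Candidate`, `STACKED_CHAIN`, `TRANSFER`, and `select`, as well as every cost value, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """One hardware-specific realization of a tensor operation."""
    device: str     # "GPU" or "PTU"
    latency: float  # made-up units, not measured values
    energy: float   # made-up units, not measured values


# Hypothetical stacked chain: each operation carries a stack of candidates.
STACKED_CHAIN = [
    ("matmul_qkv", [Candidate("GPU", 4.0, 10.0), Candidate("PTU", 6.0, 3.0)]),
    ("softmax",    [Candidate("GPU", 1.0, 1.0)]),  # assumed GPU-only (nonlinear)
    ("matmul_out", [Candidate("GPU", 4.0, 10.0), Candidate("PTU", 6.0, 3.0)]),
]

# Assumed fixed penalty whenever consecutive operations run on different devices.
TRANSFER = {"latency": 1.5, "energy": 0.5}


def select(chain, objective):
    """Pick one candidate per operation, minimizing total `objective` cost.

    Returns (total_cost, [(op_name, device), ...]).
    """
    best = {}  # device of the last op -> (cost so far, mapping so far)
    for name, stack in chain:
        nxt = {}
        for cand in stack:
            cost_here = getattr(cand, objective)
            if not best:
                options = [(cost_here, [(name, cand.device)])]
            else:
                options = [
                    (
                        prev_cost + cost_here
                        + (TRANSFER[objective] if prev_dev != cand.device else 0.0),
                        prev_map + [(name, cand.device)],
                    )
                    for prev_dev, (prev_cost, prev_map) in best.items()
                ]
            choice = min(options, key=lambda o: o[0])
            if cand.device not in nxt or choice[0] < nxt[cand.device][0]:
                nxt[cand.device] = choice
        best = nxt
    return min(best.values(), key=lambda o: o[0])


if __name__ == "__main__":
    for objective in ("latency", "energy"):
        cost, mapping = select(STACKED_CHAIN, objective)
        print(objective, cost, mapping)
```

With these illustrative numbers, the latency objective keeps all three operations on the GPU, while the energy objective moves both matrix multiplications to the PTU. This mirrors, in toy form, the observation that the two objectives can produce distinct hardware mappings.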